Section: New Results

Scenario Recognition

Participants: Inès Sarray, Sabine Moisan, Annie Ressouche, Jean-Paul Rigault.

Keywords: Synchronous Modeling, Model checking, Mealy machine, Cognitive systems.

Activity recognition systems aim to recognize the intentions and activities of one or more persons in real life by analyzing their actions and the evolution of the environment. This is done with pattern matching and clustering algorithms, combined with adequate knowledge representation (e.g., scene topology, temporal constraints) at different abstraction levels (from raw signal to semantics). Stars has been working to improve and facilitate the generation of these activity recognition systems. Since these systems can be used in a wide range of important fields, we propose a generic approach to design activity recognition engines. These engines must continuously and repeatedly interact with their environment and react to its stimuli. Their dependability is also very important to avoid possible safety issues, which is why we also rely on formal methods that allow us to verify the behavior of these engines. Synchronous modeling is a solution that allows us to create formal models that clearly describe the system behavior and its reactions when it detects different stimuli. Using these formal models, we can build an effective recognition engine for each model and validate it easily using model checking. This year, we adapted this approach to create a new, simple scenario language to express scenario behaviors and to automatically generate the corresponding recognition automata at compile time. These automata will be embedded into the recognition engine at runtime.
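
To make the idea of a reactive recognition automaton concrete, here is a minimal sketch of a Mealy machine that reacts to one input event per instant and emits an output when a scenario is completed. It is only an illustration under stated assumptions: the scenario ("a person enters a zone, then sits down"), the event names, and the `MealyRecognizer` class are hypothetical and do not come from the Stars implementation.

```python
from dataclasses import dataclass, field


@dataclass
class MealyRecognizer:
    """Deterministic Mealy machine: the output depends on the state and the input."""

    initial: str
    # Maps (state, event) to (next_state, output).
    transitions: dict
    state: str = field(init=False)

    def __post_init__(self):
        self.state = self.initial

    def react(self, event):
        """Perform one reaction to `event` and return the emitted output (or None)."""
        # Events with no matching transition leave the state unchanged.
        next_state, output = self.transitions.get(
            (self.state, event), (self.state, None)
        )
        self.state = next_state
        return output


# Hypothetical scenario: a person enters a zone, then sits down.
TRANSITIONS = {
    ("idle", "enter_zone"): ("inside", None),
    ("inside", "leave_zone"): ("idle", None),
    ("inside", "sit_down"): ("idle", "scenario_recognized"),
}


def run(events):
    """Feed a sequence of events to a fresh recognizer and collect its outputs."""
    machine = MealyRecognizer("idle", TRANSITIONS)
    return [machine.react(event) for event in events]
```

Because the transition table is finite and explicit, a model of this kind is the sort of object a model checker can explore exhaustively, which is the motivation for the formal approach described above.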

Scenario Description Language

As we work with end-users who are not computer scientists, we need a friendly description language that helps them express their scenarios easily. To this end, we collaborated with Ludotic ergonomists to define the easiest way for a non-expert user to work with the new language. Using the AxureRP tool, we defined two types of language:

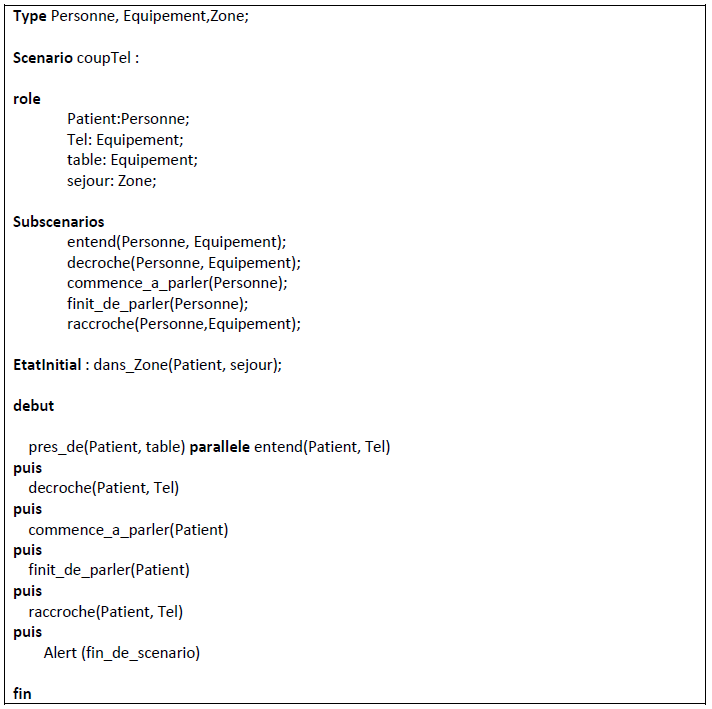

1- Textual language:

The textual language is deliberately simple. After defining the types, roles, and sub-scenarios, the user can describe a scenario with only 9 operators, as in figure 21.

This year, we implemented this textual language; it is currently being tested.

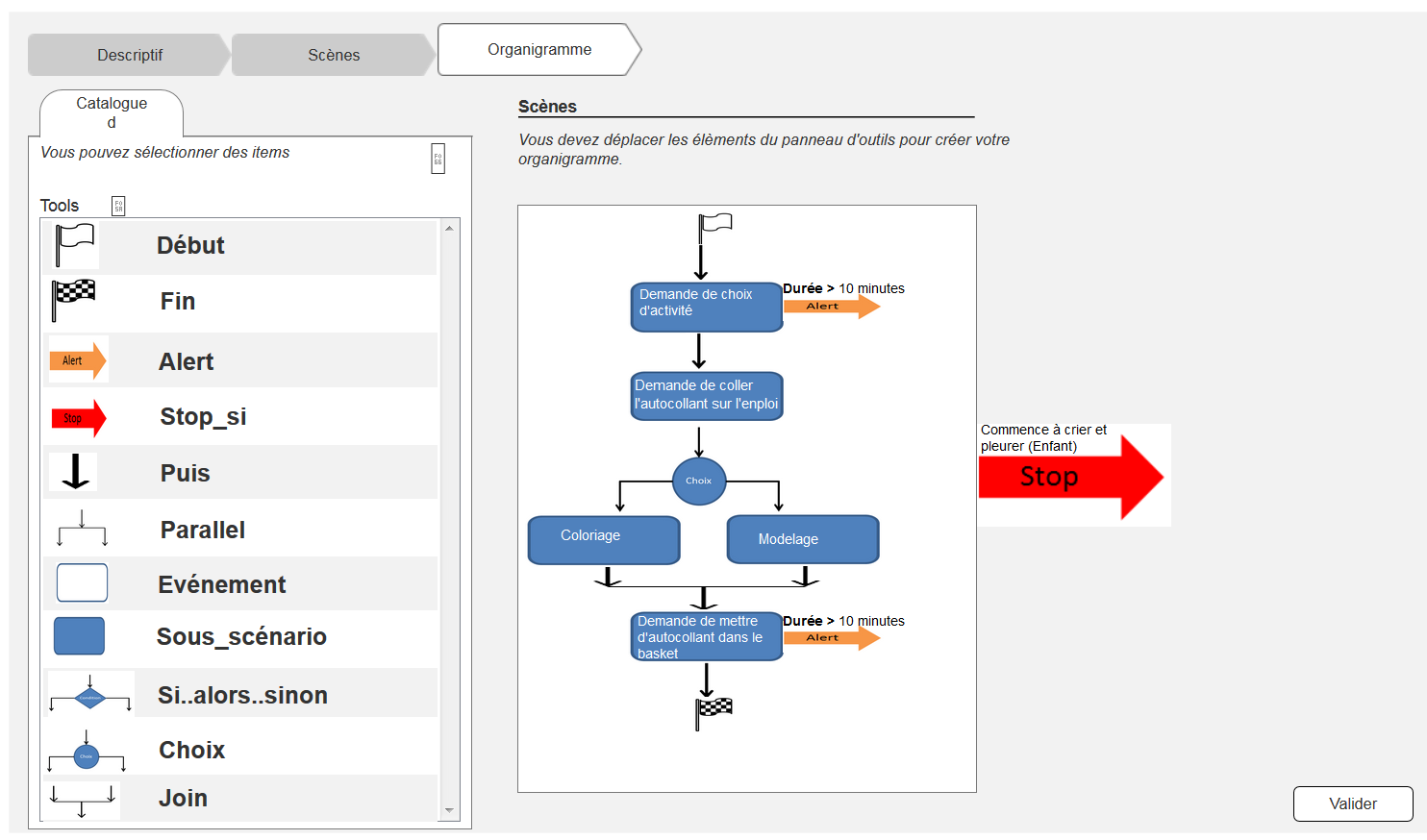

2- Graphical language:

The graphical language model has 3 basic interfaces. The first interface allows the user to define the types, roles, and initial state of the scenario. The second is dedicated to describing the sub-scenarios and to expressing simple scenarios using a timeline. For complicated scenarios, the third interface offers users a tool panel that allows them to describe their scenarios hierarchically using a flowchart-like representation (see figure 22).

Recognition Automata

This year, we also worked on the generation of recognition automata. We used synchronous modeling and semantics to define these engines. The semantics consists of a set of formal rules that describe the behavior of a program. We first specified the language operators: we rely on a 4-valued algebra with a bilattice structure to define two semantics for the recognition engine, a behavioral one and an equational one. The behavioral semantics defines the behavior of a program and its operators and gives it a clear interpretation. The equational semantics allows a modular compilation of our programs using rules that translate each program into an equation system. After defining these two semantics, we verified their equivalence for all operators by proving that they agree on both the set of emitted signals and the termination value of a program P. We implemented these semantics and are now working on the automatic generation of the recognition automata.
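
As an illustration of what a 4-valued algebra with a bilattice structure can look like, the sketch below encodes the classical four-valued bilattice (often attributed to Belnap). This is an assumption: the text does not state which algebra is used, and the names `V`, `t_and`, `t_or`, `k_meet`, `k_join`, and `neg` are hypothetical. Each value is a pair (evidence for, evidence against), so that one order compares truth and the other compares the amount of information. Reading the four values as "unknown", "absent", "present", and "inconsistent" signal statuses is likewise only one possible interpretation.

```python
from enum import Enum


class V(Enum):
    """Four values encoded as (evidence_for, evidence_against)."""

    BOT = (False, False)   # no information
    FALSE = (False, True)  # evidence against only
    TRUE = (True, False)   # evidence for only
    TOP = (True, True)     # contradictory information


def t_and(a, b):
    """Meet in the truth order (conjunction)."""
    return V((a.value[0] and b.value[0], a.value[1] or b.value[1]))


def t_or(a, b):
    """Join in the truth order (disjunction)."""
    return V((a.value[0] or b.value[0], a.value[1] and b.value[1]))


def k_meet(a, b):
    """Meet in the knowledge order: information shared by both values."""
    return V((a.value[0] and b.value[0], a.value[1] and b.value[1]))


def k_join(a, b):
    """Join in the knowledge order: information accumulated from both values."""
    return V((a.value[0] or b.value[0], a.value[1] or b.value[1]))


def neg(a):
    """Negation swaps evidence for and against; it reverses the truth order only."""
    return V((a.value[1], a.value[0]))
```

In this encoding, combining `V.TRUE` and `V.FALSE` with `k_join` yields `V.TOP`, which is how contradictory information about the same signal would surface, while `k_meet` of the same two values yields `V.BOT`.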